Signature: `timer(initialDelay: number | Date, period: number, scheduler: Scheduler): Observable`.

Terraform's main operations are `init`, `plan`, `apply`, and `destroy`, and its other key component is the state file. Inside a resource block's curly braces go its parameters, such as `cidr_block = "10.0.0.0/16"`. The state file, `terraform.tfstate`, lets us see locally everything we created with Terraform: it records ALL the resources Terraform has created.

The Airflow scheduler monitors all tasks and DAGs. Behind the scenes, it spins up a subprocess that monitors and stays in sync with a folder for all DAG objects it may contain, and periodically (every minute or so) collects DAG parsing results and inspects active tasks to see whether they can be triggered. Continuing with the setup, the next step is to start the scheduler.
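As a rough illustration of the "inspects active tasks to see whether they can be triggered" step, here is a toy Python sketch. It assumes a task is triggerable once it has no state yet (`None`, loosely mirroring Airflow's "no status") and all of its upstream tasks have succeeded. The function name `triggerable_tasks` and the task IDs are hypothetical, and Airflow's real scheduler also weighs trigger rules, pools, and concurrency limits.

```python
def triggerable_tasks(upstream, states):
    """Toy scheduler pass (hypothetical, not Airflow's implementation).

    upstream: dict mapping task_id -> list of upstream task_ids
    states:   dict mapping task_id -> state string, or None if not started
    Returns the sorted task_ids that could be triggered now.
    """
    ready = []
    for task_id, deps in upstream.items():
        if states.get(task_id) is not None:
            continue  # already queued, running, or finished
        if all(states.get(dep) == "success" for dep in deps):
            ready.append(task_id)
    return sorted(ready)


# Hypothetical example DAG: extract -> transform -> load, and extract -> report
upstream = {
    "extract": [],
    "transform": ["extract"],
    "load": ["transform"],
    "report": ["extract"],
}
states = {"extract": "success", "transform": None, "load": None, "report": None}
print(triggerable_tasks(upstream, states))  # ['report', 'transform']
```

`load` stays waiting because its upstream task `transform` has not succeeded yet.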

`terraform plan` shows what Terraform is going to do: it checks the state and gives a developer-style diff before you commit. We can run this command after creating `main.tf`.

Historically, Airflow users could schedule their DAGs by specifying a `schedule_interval` with a cron expression, a `timedelta`, or a preset Airflow schedule. Timetables, released in Airflow 2.2, brought new flexibility to scheduling: they let users create their own custom schedules in Python, effectively eliminating the limitations of those options.

The Airflow scheduler monitors all tasks and all DAGs and triggers the task instances whose dependencies have been met. The first DAG Run is created based on the minimum `start_date` for the tasks in the DAG.

When a task was running, Airflow popped up a notice saying the scheduler did not appear to be running, and the notice kept showing until the task finished: "The scheduler does not appear to be running. Last heartbeat was received 5 minutes ago. The DAGs list may not update, and new tasks will not be scheduled."
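A simplified sketch of interval-based scheduling with a `timedelta`: the first run starts at the minimum `start_date` across the DAG's tasks, and each run becomes due once its interval has fully elapsed. The helper `due_runs` and its `catchup` flag are illustrative assumptions that only approximate Airflow's behavior; cron expressions, presets, and timetables are not modelled.

```python
from datetime import datetime, timedelta


def due_runs(start_date, interval, now, catchup=True):
    """Return logical dates of runs whose interval [d, d + interval) has ended by `now`.

    Simplified, hypothetical model: not Airflow's scheduler code.
    """
    runs = []
    logical_date = start_date
    while logical_date + interval <= now:
        runs.append(logical_date)
        logical_date += interval
    if not catchup:
        # Without catchup, keep only the most recent completed interval.
        runs = runs[-1:]
    return runs


# The first run is based on the minimum start_date across the DAG's tasks.
task_start_dates = [datetime(2021, 1, 2), datetime(2021, 1, 1)]
start = min(task_start_dates)
now = datetime(2021, 1, 4, 12, 0)

for d in due_runs(start, timedelta(days=1), now):
    print(d.date())  # 2021-01-01, then 2021-01-02, then 2021-01-03
```

With `catchup=False`, the same call would return only the 2021-01-03 run.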

Terraform configurations follow a consistent pattern: each block starts with the `resource` keyword, followed by the resource type, the resource name, and the parameters in curly braces.
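To see the pattern concretely, this hypothetical Python helper (`render_resource`) prints a block in Terraform's `resource "TYPE" "NAME" { ... }` shape. The `aws_vpc` type and the `main` name are example choices, and the sketch only handles string parameters without escaping; real HCL also has numbers, lists, maps, and nested blocks.

```python
def render_resource(resource_type, name, params):
    """Render a Terraform-style resource block (hypothetical helper, strings only)."""
    lines = [f'resource "{resource_type}" "{name}" {{']
    for key, value in params.items():
        lines.append(f'  {key} = "{value}"')
    lines.append("}")
    return "\n".join(lines)


print(render_resource("aws_vpc", "main", {"cidr_block": "10.0.0.0/16"}))
# resource "aws_vpc" "main" {
#   cidr_block = "10.0.0.0/16"
# }
```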

# Airflow scheduler code

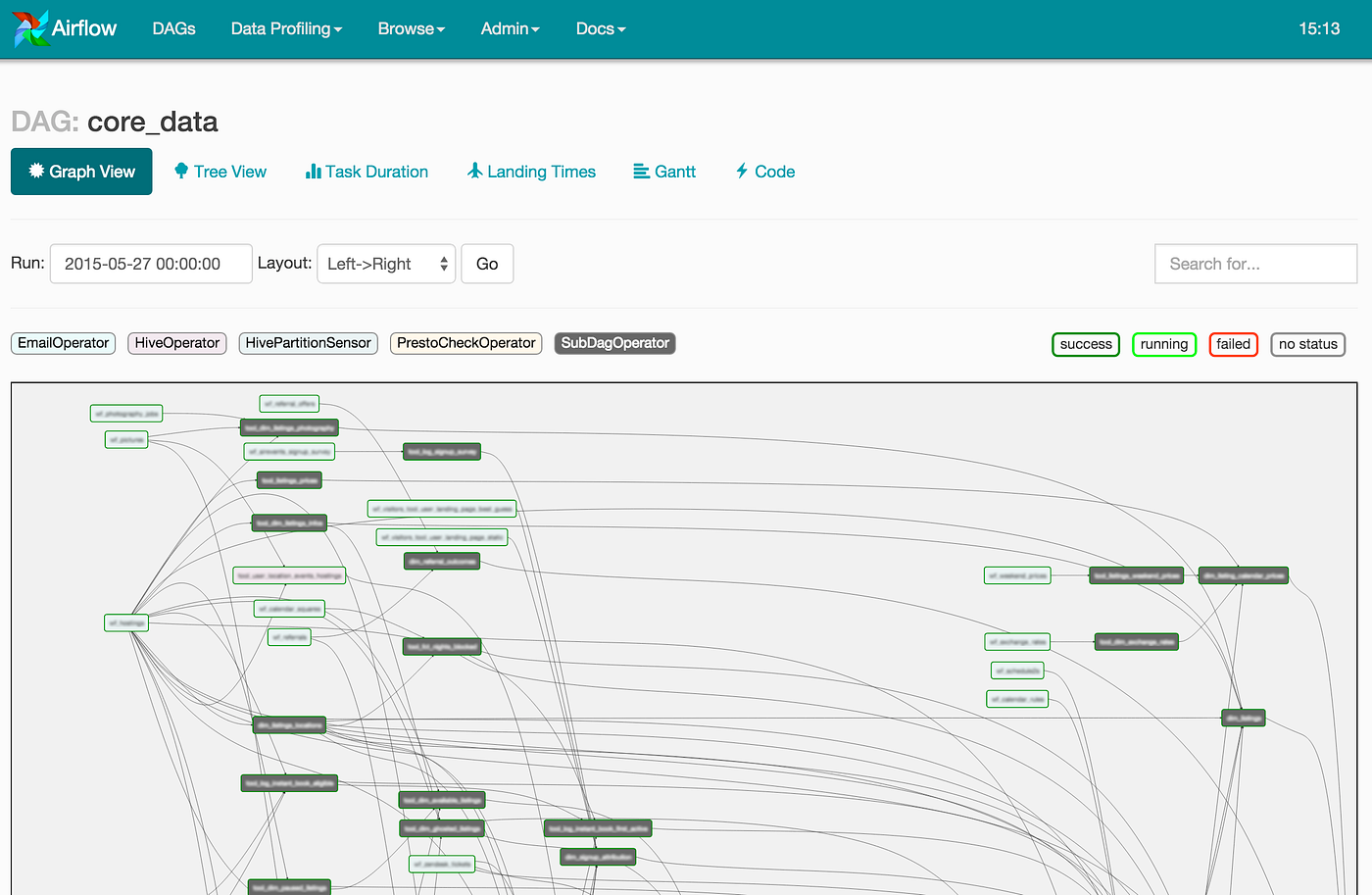

The `DAG` object is what we'll need to instantiate a DAG.

Terraform's essentials are its main operations (`init`, `plan`, `apply`, and `destroy`) and the state file. Terraform is an infrastructure-as-code tool: a command-line program that lets us describe infrastructure in standard configuration files and then run `terraform apply` to actually create that infrastructure, whether in AWS, Google Cloud, Azure, any other cloud provider, or even locally. It has many plugins.

Apache Airflow is an open-source tool for orchestrating complex workflows and data processing pipelines; it expresses and executes workflows as directed acyclic graphs. To check our Python script for syntax issues, just run the `.py` file on the command line. Running it can surface warnings such as:

`Users/theja/miniconda3/envs/datasci-dev/lib/python3.7/site-packages/airflow/models/dag.py:1342: PendingDeprecationWarning: The requested task could not be added to the DAG because a task with task_id create_tag_template_field_result is already in the DAG. Starting in Airflow 2.0, trying to overwrite a task will raise an exception.`

`pullPolicy`: Airflow Scheduler image pull policy (default: `IfNotPresent`).
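The PendingDeprecationWarning above is about task ID uniqueness: a DAG cannot hold two tasks with the same `task_id`, and from Airflow 2.0 the duplicate raises an exception instead of a warning. This toy Python class sketches that rule; `ToyDag` and `DuplicateTaskIdError` are hypothetical stand-ins, not Airflow's classes.

```python
class DuplicateTaskIdError(Exception):
    """Hypothetical stand-in for the exception Airflow 2.0+ raises on a repeated task_id."""


class ToyDag:
    """Minimal container illustrating the task_id uniqueness rule (not Airflow's DAG class)."""

    def __init__(self, dag_id):
        self.dag_id = dag_id
        self.tasks = {}

    def add_task(self, task_id):
        # Refuse to overwrite an existing task, as Airflow 2.0+ does.
        if task_id in self.tasks:
            raise DuplicateTaskIdError(
                f"Task id '{task_id}' has already been added to the DAG {self.dag_id}"
            )
        self.tasks[task_id] = {"task_id": task_id}


dag = ToyDag("example")
dag.add_task("create_tag_template_field_result")
try:
    dag.add_task("create_tag_template_field_result")
except DuplicateTaskIdError as err:
    print(err)
```

The usual fix is to give each task a unique `task_id`, or to make sure the DAG file does not create the same task twice (for example, inside a loop).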

The Apache Airflow Scheduler is a core component of Apache Airflow, and an issue with the Scheduler can prevent DAGs from being parsed and tasks from being scheduled. Low performance of the Airflow database might be the reason the scheduler is slow, and it helps to avoid task scheduling during maintenance windows. I also checked the `airflow.cfg` file: the database connection parameter, task memory, and max parallelism. Long story short: everything was fine. I then searched for the message in the Apache Airflow Git repository and found a very similar bug: AIRFLOW-1156 BugFix: Unpausing a DAG with catchup=False creates an extra DAG run.

From the quickstart page: Airflow needs a home, and `~/airflow` is the default, but you can lay the foundation somewhere else if you prefer. Start the web server (the default port is 8080), then visit localhost:8080 in the browser and enable the example DAG on the home page. For instance, when you start the webserver, you should see output similar to the following:

`(datasci-dev) ttmac:lec05 theja$ airflow webserver -p 8080`

`INFO - Filling up the DagBag from /Users/theja/airflow/dags`
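Roughly speaking, the "scheduler does not appear to be running" notice means the scheduler's last heartbeat is older than a health-check threshold. A minimal sketch of that check, assuming a 30-second threshold (an illustrative value) and a hypothetical helper name `scheduler_looks_down`:

```python
from datetime import datetime, timedelta

# Illustrative threshold: an assumption, not necessarily Airflow's default.
HEALTH_CHECK_THRESHOLD = timedelta(seconds=30)


def scheduler_looks_down(last_heartbeat, now, threshold=HEALTH_CHECK_THRESHOLD):
    """Return (is_stale, whole_minutes_since_heartbeat) for a heartbeat timestamp."""
    age = now - last_heartbeat
    return age > threshold, int(age.total_seconds() // 60)


stale, minutes = scheduler_looks_down(datetime(2021, 6, 1, 12, 0), datetime(2021, 6, 1, 12, 5))
if stale:
    print(
        "The scheduler does not appear to be running. "
        f"Last heartbeat was received {minutes} minutes ago."
    )
```

A scheduler that is alive but too slow to heartbeat (for example, because of a struggling database) would trip the same check, which is one reason the notice can appear while tasks are still running.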

# Airflow scheduler install

Let's install the Airflow package and get a server running. The Airflow Scheduler reads the data pipelines represented as Directed Acyclic Graphs (DAGs), schedules the contained tasks, and monitors task execution. As part of Apache Airflow 2.0, a key area of focus has been the Airflow Scheduler, which was refactored to be significantly faster and ready for scale.

As listed above, a key benefit of Airflow is that it allows us to describe an ML pipeline in code (and in Python!). Airflow works with graphs (specifically, directed acyclic graphs, or DAGs) that relate tasks to each other and describe their ordering. Each node in the DAG is a task, and incoming arrows from other tasks indicate that those tasks are its upstream dependencies.
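Since each task must run after its upstream dependencies, a valid execution order for a DAG is a topological sort. Here is a sketch using Kahn's algorithm on a hypothetical four-step ML pipeline; the task names are made up, and this illustrates the idea rather than how Airflow itself orders tasks.

```python
from collections import deque


def topological_order(upstream):
    """Order tasks so each comes after all of its upstream dependencies (Kahn's algorithm).

    upstream: dict mapping task_id -> list of upstream task_ids; every
    dependency must also appear as a key. Raises ValueError on a cycle.
    """
    indegree = {task: len(deps) for task, deps in upstream.items()}
    downstream = {task: [] for task in upstream}
    for task, deps in upstream.items():
        for dep in deps:
            downstream[dep].append(task)

    # Start with tasks that have no upstream dependencies.
    queue = deque(sorted(t for t, n in indegree.items() if n == 0))
    order = []
    while queue:
        task = queue.popleft()
        order.append(task)
        for child in sorted(downstream[task]):
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)

    if len(order) != len(upstream):
        raise ValueError("cycle detected: not a directed acyclic graph")
    return order


# Hypothetical ML pipeline
pipeline = {
    "load_data": [],
    "clean_data": ["load_data"],
    "train_model": ["clean_data"],
    "evaluate_model": ["train_model", "clean_data"],
}
print(topological_order(pipeline))
```

The cycle check is what makes the "acyclic" part of DAG matter: if two tasks depend on each other, no valid order exists and the function refuses to produce one.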
